Physics 17, 136

Researchers leverage synapse-level maps of the fruit fly brain to examine how neuronal connection probabilities vary with distance, offering insights into how these neuronal networks may optimize function within spatial constraints.

Brains perform astounding feats of computation and communication, all while balancing tight physical and biological constraints. Take, for example, a relatively simple organism like the fruit fly. The brain of a fruit fly is no larger than a poppy seed, containing some 130,000 neurons and a few tens of millions of synapses. Despite its tiny size, this neuronal network supports complex functions, from navigating diverse environments in search of food to engaging in courtship rituals—and occasionally annoying humans. How are these neuronal networks able to operate so well within the inherent spatial constraints? Understanding the organization and the workings of these and other neural systems is a key endeavor, spanning decades of research across neuroscience and physics. A recent study by Xin-Ya Zhang at Tongji University, China, and colleagues takes a step in this direction, reporting a scaling relationship that links neuronal connection probabilities to physical distance in the fruit fly brain [1]. This observation, made across different developmental stages of the fruit fly, could explain how these neuronal networks achieve optimal function within the brain’s inherent geometric constraints.

Researchers have long studied the brain’s macroscale structure and dynamics, but only recently have advances in electron microscopy and image reconstruction made it possible to build large-scale datasets of the brain’s cellular structure across species. These large-scale experiments open up new opportunities to uncover underlying principles of brain-network organization and function using quantitative tools from mathematics and physics [2]. Since form often reflects function in biology, these maps of neurons and their synapses, called connectomes, may hold valuable clues about how the brain operates. Connectomes are inherently spatial [3], and the organization of these neuronal networks is shaped by physical constraints within the brain.

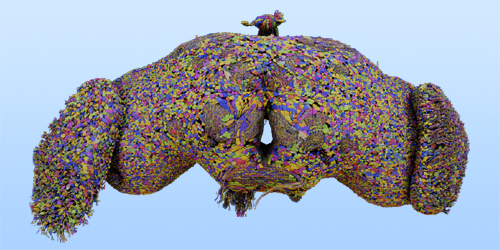

Zhang and colleagues examined brain-wide, synaptic-resolution connectomes of the fruit fly at both larval and adult stages (Fig. 1) [4]. They investigated how the probability of two neurons being connected by at least one synapse varies with the distance between their cell bodies. The team reports that, across both developmental stages, this connection probability between pairs of neurons falls off with distance according to a power law. This finding is consistent with studies of heavy-tailed distributions in coarser-resolution data from other animals [5], but the fruit fly connectomes exhibit greater numbers of long-range connections.
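To make the measurement concrete, here is a minimal sketch of how a distance-dependent connection probability could be estimated and fitted. It is not the authors' pipeline: the data are synthetic, the exponent `true_alpha`, the bin count, and the function names are all illustrative assumptions, and the fit is a plain least-squares line in log-log space.

```python
import numpy as np

def connection_probability_by_distance(dist, connected, n_bins=12):
    """Fraction of connected pairs in logarithmically spaced distance bins."""
    edges = np.logspace(np.log10(dist.min()), np.log10(dist.max()), n_bins + 1)
    # Assign each pair to a bin; clipping keeps the extreme distances in range.
    idx = np.clip(np.digitize(dist, edges) - 1, 0, n_bins - 1)
    pairs = np.bincount(idx, minlength=n_bins)
    links = np.bincount(idx, weights=connected, minlength=n_bins)
    centers = np.sqrt(edges[:-1] * edges[1:])  # geometric bin centers
    keep = links > 0  # bins without links cannot be placed on log axes
    return centers[keep], links[keep] / pairs[keep]

def fit_power_law_exponent(centers, prob):
    """Slope of log(prob) versus log(distance), with the sign flipped."""
    slope, _ = np.polyfit(np.log(centers), np.log(prob), 1)
    return -slope

# Synthetic stand-in for a connectome (assumption): each neuron pair is
# connected with probability distance**(-true_alpha).
rng = np.random.default_rng(0)
true_alpha = 1.5  # hypothetical exponent, not the value reported in the paper
dist = rng.uniform(1.0, 100.0, size=200_000)
connected = (rng.random(dist.size) < dist ** (-true_alpha)).astype(float)

centers, prob = connection_probability_by_distance(dist, connected)
alpha_hat = fit_power_law_exponent(centers, prob)
print(f"estimated exponent: {alpha_hat:.2f}")
```

On a real connectome, `dist` would hold the distances between cell bodies for every neuron pair and `connected` would flag pairs joined by at least one synapse. A straight line on log-log axes is only a first check, and more careful statistical tests are usually needed before claiming a power law.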

What could be the significance of such a distribution? The researchers put forth two hypotheses. First, this distribution may maximize the information communicated under a cost constraint. Second, this distribution may enable the brain to strike an optimal balance between segregation (the localization of coordinated neural activity in specific regions) and integration (the distribution of neural activity throughout the brain).

Since the connectome forms the scaffold for communication of information between neurons, its geometry and topology directly influence the information transmitted [6]. To quantify communication, the researchers compute the average information entropy, which reflects the diversity of information a neuron can send or receive. To maximize this entropy, it is preferable to have long-distance links that can obtain information from new parts of the network [7]. For neurons, this could mean receiving inputs from diverse brain regions, including different sensory modules. However, communication along such long-distance links is metabolically expensive [6, 7]. Zhang and colleagues find that with a fixed energy budget (or constraint on total path length), deviating from the empirically reported power-law exponent reduces both the sending and receiving entropy. This finding suggests that the spatial distribution of connections may optimize the diversity of information propagated along the connectome for a fixed energy budget.
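The tension between diversity and cost can be illustrated with a toy calculation. The sketch below is not the entropy measure used in the study: it simply assumes that link lengths follow P(d) proportional to d^(-alpha) on a hypothetical grid of distances, then reports the Shannon entropy of that distribution alongside the mean link length as a stand-in for wiring cost.

```python
import numpy as np

def link_length_distribution(alpha, distances):
    """Normalized probability of each link length, P(d) proportional to d**(-alpha)."""
    weights = distances ** (-alpha)
    return weights / weights.sum()

def shannon_entropy(p):
    """Shannon entropy in bits, ignoring zero-probability entries."""
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

distances = np.arange(1.0, 101.0)  # hypothetical discretized distances
alphas = [0.5, 1.5, 3.0]           # illustrative exponents only
entropies, mean_lengths = [], []
for alpha in alphas:
    p = link_length_distribution(alpha, distances)
    entropies.append(shannon_entropy(p))
    # Mean link length serves as a crude proxy for wiring cost per link.
    mean_lengths.append(float((p * distances).sum()))
    print(f"alpha={alpha}: entropy={entropies[-1]:.2f} bits, "
          f"mean length={mean_lengths[-1]:.1f}")
```

Smaller exponents spread links over more distances, which raises the entropy but also the mean length. The toy only shows this trade-off; finding the exponent that maximizes entropy under a fixed budget, as in the study, would require the authors' full network-level formulation.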

Next, to examine the consequence of this spatial relationship on the overall functioning of the network, the researchers simulate dynamical models of neuron activity. Previous research has established that brain networks consist of functional modules that balance segregation and integration of activity in the network [8]. Measuring the covariance of temporal activity between neurons, the researchers quantify the extent to which neurons engage with each other across diverse modules in the network. They find that introducing deviations from the observed power-law distribution, either by varying its parameters or by giving it an exponential character, destroys the balance between segregation and integration in the network.
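As a rough illustration of how covariance can expose segregation and integration, consider a toy network of two modules driven by noise. Everything here is an assumption made for the sketch: the linear dynamics x[t+1] = W x[t] + noise, the coupling strengths, and the `integration_ratio` index are hypothetical and are not the model or the measure used in the study. For a symmetric, stable coupling matrix W and unit white noise, the stationary covariance of these dynamics is (I - W^2)^(-1), so no simulation is needed.

```python
import numpy as np

def two_module_coupling(module_size, w_in, w_out):
    """Symmetric coupling: w_in within a module, w_out between the two modules."""
    labels = np.repeat([0, 1], module_size)
    same = labels[:, None] == labels[None, :]
    coupling = np.where(same, w_in, w_out).astype(float)
    np.fill_diagonal(coupling, 0.0)  # no self-coupling
    return coupling, same

def stationary_covariance(coupling):
    """Covariance of x[t+1] = coupling @ x[t] + unit white noise (symmetric coupling)."""
    if np.max(np.abs(np.linalg.eigvalsh(coupling))) >= 1.0:
        raise ValueError("dynamics are unstable; reduce the coupling strengths")
    n = coupling.shape[0]
    return np.linalg.inv(np.eye(n) - coupling @ coupling)

def integration_ratio(cov, same):
    """Mean between-module covariance divided by mean within-module covariance."""
    off_diag = ~np.eye(cov.shape[0], dtype=bool)
    within = cov[same & off_diag].mean()
    between = cov[~same].mean()
    return between / within

ratios = []
for w_out in (0.0, 0.01, 0.03):  # illustrative between-module strengths
    coupling, same = two_module_coupling(module_size=10, w_in=0.05, w_out=w_out)
    ratios.append(integration_ratio(stationary_covariance(coupling), same))
    print(f"w_out={w_out}: between/within covariance = {ratios[-1]:.3f}")
```

A ratio near zero corresponds to fully segregated modules, while a ratio near one means activity is shared almost as strongly across modules as within them. A balanced network would sit between these extremes.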

Finally, Zhang and colleagues use their findings to put forth a model to predict neuronal connectivity. First, they use a machine-learning algorithm to predict the presence of connections between pairs of neurons based on just five parameters: the distance between the neurons and the numbers of incoming and outgoing connections at each of the two neurons. These features predict the connectome with high accuracy, suggesting that it may be generally possible to predict the connectome using simple rules. Then, using the three features that the algorithm ranks as most important (the distance, the incoming connection number at one neuron, and the outgoing connection number at the other), the researchers construct a simple model to predict the connection probabilities. This simple model agrees well with the empirical data and achieves accuracy comparable to that of the machine-learning model.
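One way such a three-feature rule could look is sketched below. The functional form is an assumption chosen for illustration, namely a product of the two connection numbers and a power law in distance, clipped to the range of valid probabilities. The parameters `alpha` and `scale` are hypothetical, and the form actually used in the study may differ.

```python
import numpy as np

def connection_probability(distance, out_degree_source, in_degree_target,
                           alpha, scale):
    """Hypothetical rule: P = scale * k_out * k_in * distance**(-alpha), clipped to [0, 1]."""
    raw = scale * out_degree_source * in_degree_target * np.power(distance, -alpha)
    return np.clip(raw, 0.0, 1.0)

# Illustrative values only: one source neuron with 30 outgoing connections and
# target neurons with 40 incoming connections at three different distances.
distances = np.array([5.0, 20.0, 80.0])
probs = connection_probability(distances, out_degree_source=30,
                               in_degree_target=40, alpha=1.5, scale=1e-3)
print(probs)
```

In practice, `alpha` and `scale` would be fitted to the connectome, for example by maximizing the likelihood of the observed connections, and the resulting predictions compared against held-out neuron pairs.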

What comes next? While the present study measures distances between the main bodies of the neurons, it would be valuable to account for the spatial morphology of neurons in calculations, as some neurons have long axons that extend across large distances. It would also be interesting to see to what extent these findings are valid in larger and more complex brains. Additionally, investigating the role and specificity of the long-range connections identified in this work, such as whether they connect distinct brain regions, could link the work more directly to the neurobiology of the organism. Finally, this work, together with recent findings of neuromorphic features in spatially embedded artificial neural networks [9], opens up exciting possibilities for artificial intelligence inspired by brain geometry.

References

1. X.-Y. Zhang et al., “Geometric scaling law in real neuronal networks,” Phys. Rev. Lett. 133, 138401 (2024).

2. S. Kulkarni and D. S. Bassett, “Towards principles of brain network organization and function,” Annu. Rev. Biophys. (to be published) (2025), arXiv:2408.02640.

3. M. Barthélemy, “Spatial networks,” Phys. Rep. 499, 1 (2011).

4. S. Dorkenwald et al., “Neuronal wiring diagram of an adult brain,” bioRxiv 379 (2023); M. Winding et al., “The connectome of an insect brain,” Science 379, eadd9330 (2023); L. K. Scheffer et al., “A connectome and analysis of the adult Drosophila central brain,” eLife 9, e57443 (2020).

5. L. Magrou et al., “The meso-connectomes of mouse, marmoset, and macaque: Network organization and the emergence of higher cognition,” Cereb. Cortex 34, bhae174 (2024).

6. C. Seguin et al., “Brain network communication: concepts, models and applications,” Nat. Rev. Neurosci. 24, 557 (2023).

7. Y. Hu et al., “Possible origin of efficient navigation in small worlds,” Phys. Rev. Lett. 106, 108701 (2011).

8. M. A. Bertolero et al., “The modular and integrative functional architecture of the human brain,” Proc. Natl. Acad. Sci. U.S.A. 112, E6798 (2015).

9. J. Achterberg et al., “Spatially embedded recurrent neural networks reveal widespread links between structural and functional neuroscience findings,” Nat. Mach. Intell. 5, 1369 (2023).