Recently, I polled y’all on whether ecological studies have improved over time in one specific, quite basic respect: sample size. Here are the poll results, along with the answer. Both of which are given away in the post title: most poll respondents think that sample sizes have increased over time in ecology. Most poll respondents are wrong. (Sorry most poll respondents!)

Poll results

We got 101 responses; thanks to everyone who responded! 68% of respondents guessed that ecological sample sizes have increased over time, vs. 32% who guessed that they haven’t (“not sure” wasn’t an option).

I also asked respondents how confident they were in their guesses. The majority (54%) were a little confident, meaning that they thought their guesses were a bit better than a coin flip. 22% were somewhat confident, meaning that they’d be a bit surprised to be wrong. 16% were not at all confident. Just 9% were very confident, meaning that they’d be shocked to be wrong. I appreciate that our readers are circumspect. 🙂

There was no association between respondents’ confidence in their guesses and the accuracy of their guesses. I note in passing that, among very confident respondents, incorrect guesses outnumbered correct guesses 7:2.
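A quick back-of-the-envelope check shows how little that 7:2 split says on its own. The sketch below assumes, purely for illustration, that each very confident respondent had the same 32% chance of guessing correctly as poll respondents overall, and asks how often 2 or fewer out of 9 would then be right. The function name `binom_cdf` is just a helper made up for this example.

```python
from math import comb

def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

# Assumption for illustration: every very confident respondent is correct
# with probability 0.32, the overall share of correct guesses in the poll.
p_correct = 0.32
n_very_confident = 9  # 7 incorrect + 2 correct
n_right = 2

prob = binom_cdf(n_right, n_very_confident, p_correct)
print(f"P(at most {n_right} of {n_very_confident} correct) = {prob:.2f}")
```

Under that assumption the probability comes out at roughly 0.4, so a 7:2 split among nine people is entirely compatible with confidence being unrelated to accuracy.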

The poll offered respondents the opportunity to explain why they guessed as they did. Numerous respondents commented to say that technological advances like remote sensing likely have increased our largest sample sizes. But commenters differed in whether they thought those technological advances were sufficiently widespread to lead to a general increase in sample size, or whether they’ve merely stretched out the upper tail of the sample size distribution. A few commenters also wondered if changes in publication practices, such as the advent of unselective journals like PLOS ONE, mean that we’re also publishing more small sample studies than we used to. Here are a few of the comments to give you the flavor:

I think the range has likely increased, but the growth of ecology publishing means we are probably publishing as high a proportion of small-sample size studies as ever, leading to no overall change.

I’m imagining that a decent number of massive studies (e.g., correlating abundance and environment across thousands of forest inventory plots) that are more common now than before could pull up a mean, even if something like a median doesn’t change as much (we still do a great many studies of modest size)

GPS collars on free-ranging animals aside, most data sets that I am handed have a bare minimum sample size to make any conclusions (whether it’s numbers of wetlands sampled, transects surveyed, animals captured, etc.). I think more funding has led to more studies, but funders for any given study don’t pour more money into increasing sample sizes.

My guess is that mean sample size has increased due to a few studies having larger sample size (i.e. a progressively larger span of N with time) – not sure the median or mode has changed.

Also, I was amused by the last sentence of this comment:

I would think that as ecology has grown and developed as a field (and presumably acquired more funding to conduct larger or longer projects), sample sizes would also increase. Even more so with the advent of big data. But now I’m wondering if this is less straightforward because I’m being polled about it 🤔

Our readers know us. 🙂

Ecological sample sizes have not increased over time

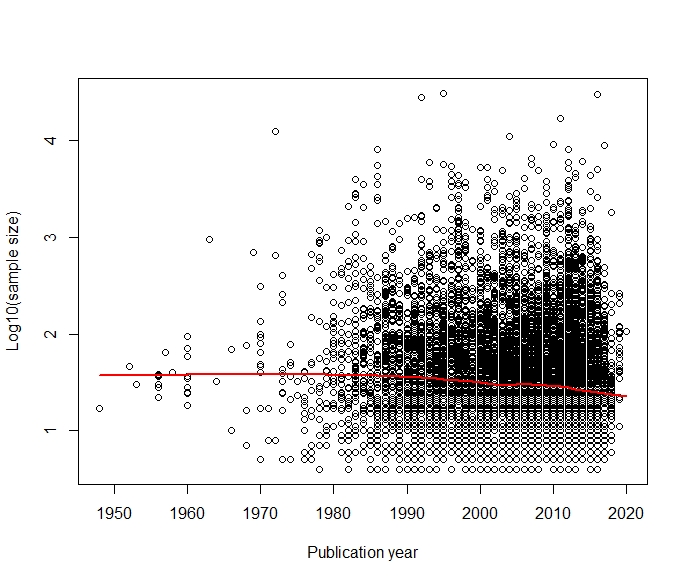

If we’re restricting attention to “sample sizes of 16,000+ correlation coefficients that were later included in meta-analyses” (which I told poll respondents I would be doing), sample sizes have not increased over time. If anything, just the opposite! Here’s a plot of the (log-transformed) sample sizes of those 16,000+ correlation coefficients, vs. their year of publication. The red line is a LOWESS smoother. Many points are identical and so not visible:

Note that, with 16,000+ observations, we definitely don’t need to worry that we lack the statistical power to detect an increasing trend.
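To put a rough number on that, here is a minimal sketch of a textbook power calculation for a correlation, using the Fisher z approximation with standard error 1/sqrt(n - 3). It treats all 16,000 points as independent, which is an optimistic assumption since many correlations come from the same paper. The function name and the choice of 5% alpha and 80% power are conventions picked for this example, not details of the analysis in this post.

```python
from math import sqrt, tanh
from statistics import NormalDist

def min_detectable_r(n: int, alpha: float = 0.05, power: float = 0.80) -> float:
    """Smallest |r| detectable with the given two-sided alpha and power,
    using the Fisher z approximation SE(z) = 1 / sqrt(n - 3)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    z_effect = (z_alpha + z_power) / sqrt(n - 3)
    return tanh(z_effect)  # back-transform from the z scale to r

# Assumption: all observations are independent (an optimistic bound).
print(round(min_detectable_r(16_000), 3))
```

With n = 16,000 the smallest detectable correlation is about 0.02, so even a very weak trend between sample size and publication year would show up under these assumptions.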

Before you hassle me about alternative graphical or statistical approaches: I tried them all, they all give the same answer. A linear regression gives the same answer. Pearson’s correlation gives the same answer. A linear quantile regression (50th quantile) gives the same answer. A LOWESS smoother with a narrower or wider window than the one in the plot above gives the same answer. Calculating a separate correlation between sample size and publication year for every meta-analysis, and then looking at the distribution of those 134 correlations, gives the same answer (the mean, median, and mode of the distribution are all slightly negative). A linear regression with a hierarchical error model to allow for non-independence of correlations reported in the same paper gives the same answer. Binning the data by decade and plotting a separate boxplot for each decade gives the same answer:
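For anyone who wants to try a few of these checks on their own data, here is a minimal sketch of three of them: Pearson’s correlation, a linear regression slope, and medians binned by decade (with everything before 1980 lumped together). It is not the code behind the results in this post. The data are synthetic and generated with no trend by construction, and the function names are made up for the example. The quantile regression, LOWESS, and hierarchical model are left out to keep it dependency-free.

```python
import random
from collections import defaultdict
from math import log10, sqrt
from statistics import mean, median

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def ols_slope(x, y):
    """Slope of the least-squares line of y on x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def decade_label(year):
    """Bin a publication year by decade, lumping everything before 1980."""
    return "pre-1980" if year < 1980 else f"{year // 10 * 10}s"

def median_by_decade(years, values):
    """Median of values within each decade bin, with pre-1980 listed first."""
    groups = defaultdict(list)
    for yr, v in zip(years, values):
        groups[decade_label(yr)].append(v)
    ordered = sorted(groups.items(), key=lambda kv: (kv[0] != "pre-1980", kv[0]))
    return {label: median(vals) for label, vals in ordered}

# Synthetic stand-in data (NOT the real dataset): right-skewed sample sizes
# drawn independently of publication year, so there is no true trend.
rng = random.Random(1)
years = [rng.randint(1960, 2019) for _ in range(2000)]
log_n = [log10(4 + rng.lognormvariate(3.0, 1.2)) for _ in years]

print("Pearson r:", round(pearson_r(years, log_n), 3))
print("OLS slope (log10 n per year):", round(ols_slope(years, log_n), 4))
for label, med in median_by_decade(years, log_n).items():
    print(label, round(med, 2))
```

The point of running several checks like this is that they can disagree when a trend is confined to the tails or driven by a few decades, which is why it matters that they agree here.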

Now, central tendency isn’t everything. Many commenters–and me!–suspected that very large sample sizes might’ve become more common over time, but without moving the median or the mode all that much. I decided to check that. I binned the data by decade of publication, lumping together everything before 1980, as in that last figure above. Then I estimated skewness, and the 5th, 10th, 90th, and 95th quantiles, for each decade. The sample size distribution is indeed very right-skewed in every decade, as you can tell from that last figure above (remember that the y-axis is log-transformed). But there’s no systematic trend over time in skewness, or in the 5th, 10th, 90th, or 95th quantiles. So no, it is not the case that our upper-tail sample sizes have increased. At least not in this dataset. In absolute terms, we are indeed publishing more correlation coefficients with sample sizes of (say) 1000+ than we did several decades ago. But that’s because we’re publishing more correlation coefficients of any given sample size than we did several decades ago.
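The distinction between absolute and relative numbers is easy to see with a toy example. The sketch below uses made-up sample sizes for two hypothetical decades (not values from the dataset): the later decade has three times as many correlations with n of 1000 or more, but exactly the same share of them. The skewness and quantile helpers are one reasonable way to compute those summaries, not necessarily the estimators used for the results above.

```python
from statistics import mean, quantiles

def skewness(values):
    """Moment-based sample skewness: m3 / m2**1.5."""
    m = mean(values)
    m2 = mean([(v - m) ** 2 for v in values])
    m3 = mean([(v - m) ** 3 for v in values])
    return m3 / m2 ** 1.5

def tail_quantiles(values):
    """5th, 10th, 90th and 95th percentiles via statistics.quantiles."""
    cuts = quantiles(values, n=20)  # 19 cut points at 5%, 10%, ..., 95%
    return {"q05": cuts[0], "q10": cuts[1], "q90": cuts[17], "q95": cuts[18]}

def share_at_least(values, threshold):
    """Fraction of values that are >= threshold."""
    return sum(v >= threshold for v in values) / len(values)

# Hypothetical sample sizes, invented for illustration only.
older = [10] * 45 + [50] * 40 + [200] * 10 + [2000] * 5  # 100 correlations
newer = older * 3                                        # 300 correlations

for name, ns in (("older decade", older), ("newer decade", newer)):
    big = sum(n >= 1000 for n in ns)
    print(name, "| count n>=1000:", big,
          "| share:", share_at_least(ns, 1000),
          "| skewness:", round(skewness(ns), 2),
          "| tails:", tail_quantiles(ns))
```

In this toy case the count of big studies triples while the share, skewness, and tail quantiles stay essentially put, which is the pattern described above.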

In case you’re curious, I did have a closer look at a few of the studies that reported correlation coefficients based on 10,000+ observations. Broadly speaking, they are indeed the sorts of studies that you’d expect to have giant sample sizes. Genetic studies with data on thousands of loci. Long-term compilations of many thousands of observations of organisms over several decades (e.g., Naef-Daenzer et al. 2017). Etc.

Finally, I can tell you that the 134 meta-analyses from which I obtained those 16,000+ correlation coefficients covered all sorts of ecological topics. I don’t know that those 134 meta-analyses are a perfectly representative sample of everything ecologists study. But 134 meta-analyses is a reasonably big sample of meta-analyses. And those 134 meta-analyses are not heavily biased towards topics or subfields that tend to have particularly small sample sizes, or that don’t make use of new technologies, or etc.

So there you have it. Sample sizes in ecology are not increasing over time. If anything, the typical sample size is slowly declining. And technological advances aren’t making really big ecological studies any more common than they used to be, at least not relative to smaller studies.

No doubt there’s room to quibble with this result. For instance, maybe this dataset omits (say) a ton of remote sensing studies with giant sample sizes, because remote sensing data typically don’t get summarized as correlation coefficients that later end up in meta-analyses. Or instead of remote sensing, maybe this dataset is omitting a bunch of huge citizen science datasets, or a bunch of huge GPS collar datasets, or whatever. But I kinda doubt it. I feel like anything this dataset omits is likely to be a small part of ecology writ large. Saying that “sample sizes have increased dramatically for a few narrowly defined ecological research topics that aren’t included in this dataset” is basically just another way of saying “sample sizes in ecology haven’t increased.”

By the way, anyone who reads this blog sufficiently avidly (and has a sufficiently good memory) already knew what today’s post would say. 🙂 I posted data on a very closely related question–are sampling variances changing over time in ecology–just a couple of years ago! There was no trend. As an aside, that old post includes not only all the effect sizes in today’s post, but a lot of additional data, from meta-analyses that use some other effect size measure besides z-transformed correlation coefficients: a total of 111,000+ effect sizes from 466 ecological meta-analyses! Which is a big reason why I believe the results in today’s post. The results in today’s post line up with what we already know from a closely related analysis of an even bigger and broader dataset.
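In case it’s not obvious why sampling variances and sample sizes are such closely related questions: for a z-transformed correlation coefficient, the standard large-sample approximation to the sampling variance depends only on the sample size n. This is textbook material (Fisher’s z-transformation) rather than anything specific to this dataset:

```latex
z = \operatorname{arctanh}(r) = \tfrac{1}{2}\ln\!\left(\frac{1+r}{1-r}\right),
\qquad
\operatorname{Var}(z) \approx \frac{1}{n-3}
```

So for this particular effect size measure, “no trend in sampling variance” and “no trend in sample size” are very nearly the same statement. For other effect size measures the sampling variance also depends on things besides n, so the link is looser.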

So here’s my question for you: should I write a short paper on this? On the one hand, I’m as open as the next person to seizing opportunities for quick papers, so long as they’re sufficiently interesting. And writing a short paper on this wouldn’t take an appreciable amount of time away from anything else I could do instead, so the opportunity cost would be low. On the other hand, I’m honestly unsure if this result rises to the level of “sufficiently interesting to be worth publishing.” On the third hand, the fact that about 2/3 of the poll respondents didn’t expect this result makes me wonder if it’s a more interesting result than I had originally thought. What do you think? Should I write this up for publication? Take the poll below!

p.s. Now I’m wondering about other fields. Are there any large fields of science for which typical sample sizes have increased appreciably over time? The only obvious answer that occurs to me is “omics” fields, specifically evolutionary genomics. So long as “sample size” means things like “number of loci,” or “number of base pairs,” not “number of individual organisms.” You have to be careful how you define “sample size” when you ask if it’s increasing over time. For instance, in neuroscience we now have fMRI machines that will return estimates of brain activity at each of many thousands of locations (“voxels”) in a brain. But neuroscientists don’t ordinarily run many thousands of individual people through fMRI machines. So if the appropriate measure of “sample size” is number of brains sampled, rather than number of voxels within a brain, I doubt that sample sizes in neuroscience have increased massively.